Introduction

In a previous insight, we discussed the regulation of Artificial Intelligence in the world’s major economies (the US, China and Europe, with the latter divided into the EU and the UK), focusing on the strategies, action plans and policy papers that the governments of these countries have adopted on the topic.

This insight instead aims to analyse in more depth the AI regulations recently introduced in Europe, to compare the approaches of the EU and the UK, highlighting the main features that set them apart, and to better understand how these new AI-related policy measures affect the financial sector.

First of all, let us look at the most important steps that led to the EU AI Act and the UK’s White Paper, and at the key aspects they address.

The EU Artificial Intelligence Act

On 8 December 2023, the European Parliament and Council reached a political agreement on the EU’s Artificial Intelligence Act, the world’s first comprehensive AI law, based on the first EU regulatory framework proposed by the European Commission in 2021. The Act is expected to become law in early 2024.

As part of its digital strategy, the EU aims to regulate the use of AI systems to ensure that they are safe, transparent, traceable, non-discriminatory and environmentally friendly. For this reason, the EU’s approach is described as “rights-driven”.

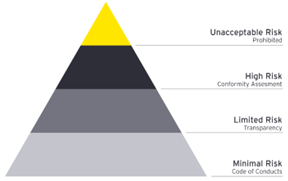

Under the EU AI Act, AI systems are analysed and classified according to the risk they pose to users (as shown in the figure above), and they are regulated more or less strictly depending on the risk level identified: the higher the risk, the stricter the rules.

Low-risk AI systems will be subject only to transparency requirements. High-risk AI systems, by contrast, will be subject to stricter rules on fundamental rights impact assessments, conformity assessments, data governance, risk management and quality management systems, transparency, accuracy, robustness and cybersecurity. High-risk systems include specific medical devices, recruitment, HR and worker management tools, infrastructure management systems and systems used in the financial sector, which we discuss in more detail later in this insight.

AI systems that negatively affect safety or fundamental rights are considered high-risk. These are divided into two subcategories:

1. AI systems used in products covered by the EU’s product safety legislation (toys, aviation, cars and medical devices).

2. AI systems falling into one of the 8 areas that need to be registered in an EU database.

In addition, it was agreed to ban AI applications that pose an unacceptable risk to fundamental rights or violate EU values, including:

- Biometric identification and categorisation systems of natural persons that use sensitive characteristics (e.g. political, religious and philosophical beliefs, sexual orientation, race);

- Untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases;

- Emotion recognition in the workplace and educational institutions;

- Social scoring based on social behaviour or personal characteristics;

- AI systems that manipulate human behaviour to circumvent people’s free will;

- AI used to exploit the vulnerabilities of specific groups (e.g. due to their age, disability, or other conditions);

- Predictive policing: individuals may not be targeted in a criminal investigation solely because an algorithm says so.

However, the AI Act does not apply to AI systems developed exclusively for military and defence uses, while those used by law enforcement authorities for institutional purposes will be subject to specific safeguards.

The European Commission has set out responsibilities for all parties involved in AI systems, including non-EU providers and users, and a stricter set of rules that applies only to the most powerful AI models, such as OpenAI’s GPT-4 or Google’s Gemini, which are classified on the basis of the computing power needed to train them. This has not been well received by some EU countries, which have raised concerns that over-regulating the AI sector could hinder innovation by AI start-ups, and that the subjectivity of the evaluation could lead to the Act being applied inconsistently. France and Germany, where the biggest AI start-ups are based, have been particularly opposed.

The UK’s White Paper

In 2015, the UK began to introduce and support the application of AI in business and in the public sector. In 2020, guidance followed on cyber security and the assessment of long-term AI risks. On 29 March 2023, the UK Government published the White Paper, setting out its five principles for regulating the use of AI in the UK: safety, security and robustness; transparency; fairness; accountability and governance; and contestability and redress.

In the White Paper, the UK Government confirms its pro-innovation approach, working alongside companies to understand and improve their interaction with new technologies. It also states that it has no intention of legislating for the domestic AI sector “in the short term”, arguing that overly strict regulation could hinder the industry’s growth. The government’s hands-off approach has been criticised on the grounds that it could discourage investors in the AI sector.

Comparison between the EU’s and the UK’s approaches

The two AI regulatory frameworks differ in several respects.

The White Paper proposes a different approach to AI regulation from that of the EU AI Act. The EU’s approach is “rights-driven”, as it aims to assess the impact of AI systems on fundamental rights, whereas the UK’s is “pro-innovation”, as the UK Government is focusing on setting expectations for the development and use of AI in close consultation with industry and society.

The UK’s regulatory framework focuses on the use of AI rather than on the technology itself: it will regulate AI based on its outcomes in particular applications rather than assigning rules or risk levels to entire sectors or technologies, as the EU AI Act does.

Moreover, the EU intends to turn the AI Act into law in early 2024 in order to enforce its principles as soon as possible. The UK Government, by contrast, does not intend to legislate or make its AI principles mandatory in the short term, arguing that overly strict regulation could hinder the industry’s growth.

Another important difference concerns the freedom of action given to existing regulators. The EU AI Act imposes strict rules on all parties involved in AI-related activities and establishes penalties for those who do not comply. The White Paper, instead, defines five principles but leaves existing regulators significant flexibility in how they implement and adapt these principles to specific sectors.

The regulation of Artificial Intelligence systems’ usage in the financial sector

Both the EU AI Act and the UK White Paper aim to regulate the development and use of AI systems across a wide range of sectors, including the financial sector, where AI has a significant impact.

Specifically, because the EU AI Act classifies the AI systems used in the financial sector (e.g. for creditworthiness assessments, the evaluation of customers’ risk premiums, or the operation and maintenance of financial infrastructures) as “high-risk systems”, this sector is subject to a stricter set of rules. Fines are also heavier for financial institutions and companies active in finance, potentially reaching EUR 30 million or more for violations of governance requirements.

However, it is also well known that the recent development of Artificial Intelligence has resulted in the transformation of financial services and has brought about new business opportunities.

Conclusion

In conclusion, the EU and the UK have demonstrated a growing awareness of the need for comprehensive regulation of Artificial Intelligence, as the EU AI Act and the White Paper show. Despite the different regulatory approaches applied in their respective jurisdictions and the differences described above, the EU and the UK are actively pursuing shared objectives. They aim to address the challenges posed by AI systems and to develop regulatory frameworks that balance the promotion of AI innovation with the protection of ethical values across a wide range of sectors, including the financial sector.

Lastly, it is important for all parties involved in AI-related activities to stay informed about legal developments, in order to keep up to date with new regulations and proactively adjust their governance frameworks to ensure continued compliance with changing rules.
